SUPER ODOMETRY: Resilient Odometry via Hierarchical Adaptation

News

Super Odometry was selected as a top feature article in Science Robotics.

Summary

Performance Summary

"The goal of super odometry is to achieve resilience, adaptation, and generalization across all-degraded environments"

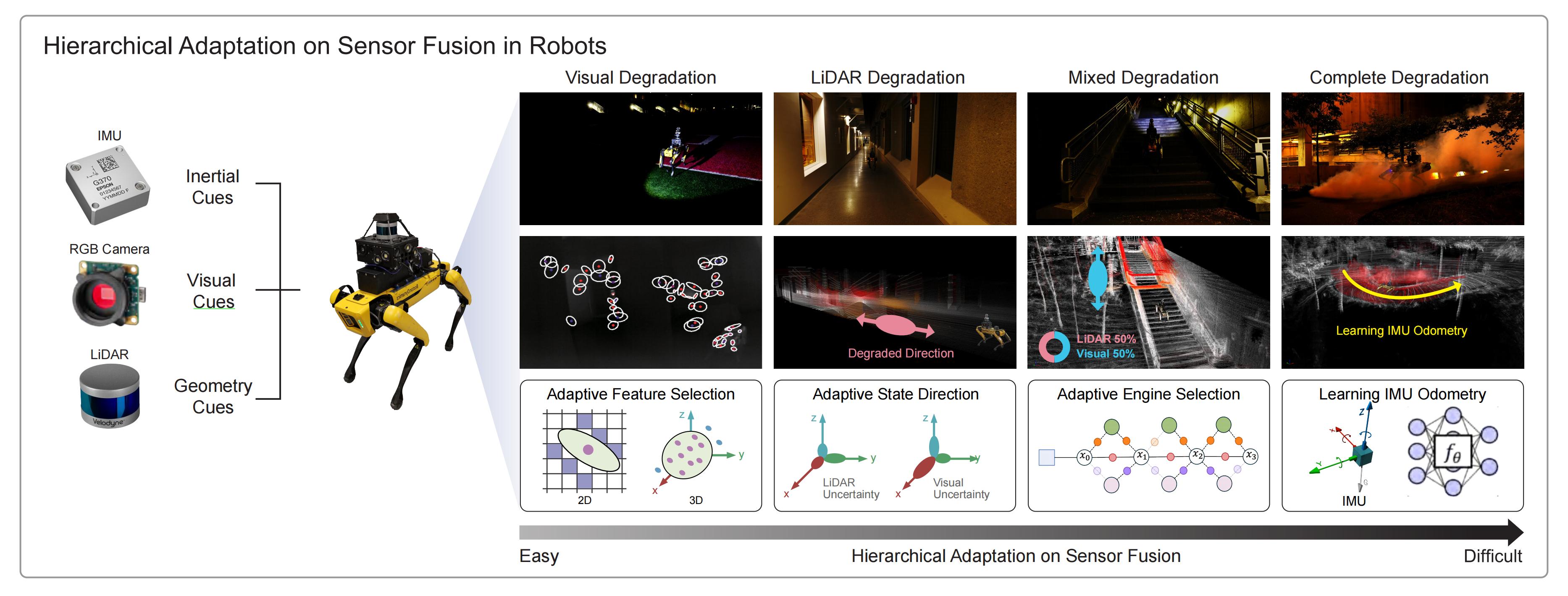

Method Summary

"For decades, odometry and SLAM have relied heavily on external sensors such as cameras and LiDAR. Super Odometry rethinks this paradigm by elevating inertial sensing to the core of state estimation, enabling robots to maintain reliable motion awareness even under extreme motion and severe perception degradation".

Motivation

When we walk through smoke or darkness, our body still knows where we are. This innate sense of motion, guided by vestibular and inertial perception known as path integration, reveals a profound truth: robust motion tracking begins not with vision, but with the body's internal sensing of movement.

Following this insight, we believe robotic systems also need a complementary sensing mechanism that acts as an "internal sense". Specifically, we developed a learned inertial module that learns the robot's internal dynamics and provides a motion prior as a fallback when external sensors such as LiDAR and cameras become unreliable. A key design goal behind Super Odometry is to unify resilience, adaptation, and generalization within a single odometry system.

Method

We propose a reciprocal fusion scheme that combines a traditional factor graph with the learned inertial module.

Nominal Conditions: The IMU network learns motion patterns from free pose labels generated by a lower-level factor graph.

Degraded Conditions: The learned IMU network takes over, leveraging the motion dynamics it has captured to maintain reliable state estimation when external perception fails.

In this way, robustness becomes adaptive, evolving with the robot’s operating conditions.
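The reciprocal relationship described above can be illustrated with a deliberately tiny sketch. It is not the authors' implementation: `ToyInertialModel`, `reciprocal_step`, the scalar `gain`, and the learning rate are all hypothetical stand-ins, and the real system uses a neural network and a full factor graph rather than a single scalar. The sketch only shows the data flow: under nominal conditions the factor-graph output serves as a free training label, and under degraded conditions the learned model's prediction becomes the estimate.

```python
from dataclasses import dataclass


@dataclass
class ToyInertialModel:
    """Hypothetical stand-in for the learned inertial module: one scalar gain."""

    gain: float = 0.0
    lr: float = 0.1  # assumed learning rate, chosen only for this toy example

    def predict(self, imu_feature: float) -> float:
        # Map an (assumed, pre-integrated) IMU feature to a displacement.
        return self.gain * imu_feature

    def update(self, imu_feature: float, label: float) -> None:
        # One gradient step on the squared error 0.5 * (prediction - label)^2.
        error = self.predict(imu_feature) - label
        self.gain -= self.lr * error * imu_feature


def reciprocal_step(model, imu_feature, factor_graph_delta, degraded):
    """Return the displacement estimate for one time step.

    Nominal: trust the factor graph and use its output as a training label.
    Degraded: ignore the factor graph and fall back to the learned model.
    """
    if degraded:
        return model.predict(imu_feature)
    model.update(imu_feature, factor_graph_delta)
    return factor_graph_delta


model = ToyInertialModel()

# Nominal phase: the factor graph reports a displacement of 2.0 per step.
for _ in range(50):
    reciprocal_step(model, imu_feature=1.0, factor_graph_delta=2.0, degraded=False)

# Degraded phase: no usable factor-graph output, so the learned prior takes over.
fallback = reciprocal_step(model, imu_feature=1.0, factor_graph_delta=None, degraded=True)
```

After the nominal phase the toy model's fallback estimate is close to the displacement the factor graph had been reporting, which is the sense in which robustness adapts to the conditions the robot has operated in.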

To balance efficiency and robustness, the system uses a multi-level scheme to manage environmental degradation:

Lower Levels: Provide rapid, resource-efficient adjustments for mild disturbances.

Higher Levels: Provide more complex and computationally intensive interventions to support state estimation recovery.

This layered design enables the system to maintain efficiency under nominal conditions and robustness under extreme scenarios to meet the demands of diverse environments.
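One way to picture this escalation logic is as a mapping from a degradation measure to an intervention level. The sketch below is an assumption-laden illustration, not the published scheme: the three-tier `Level` enum, the normalized `degradation` score in [0, 1], and the thresholds `mild` and `severe` are all hypothetical, since the text above does not specify how many levels exist or how degradation is quantified.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical intervention tiers, ordered by computational cost."""

    NOMINAL = 0  # no intervention needed
    LOWER = 1    # rapid, resource-efficient adjustment
    HIGHER = 2   # complex, computationally intensive recovery


def select_level(degradation: float, mild: float = 0.3, severe: float = 0.7) -> Level:
    """Pick the cheapest level that can handle the current degradation.

    `degradation` is an assumed normalized score in [0, 1];
    `mild` and `severe` are illustrative thresholds, not values from the paper.
    """
    if not 0.0 <= degradation <= 1.0:
        raise ValueError("degradation must lie in [0, 1]")
    if degradation < mild:
        return Level.NOMINAL
    if degradation < severe:
        return Level.LOWER
    return Level.HIGHER


# Example: a mild disturbance stays at the cheap level,
# while a severe one escalates to the expensive recovery level.
levels = [select_level(d) for d in (0.1, 0.5, 0.9)]
```

Ordering the checks from cheapest to most expensive mirrors the stated goal: the system pays for heavyweight recovery only when lighter adjustments are not enough.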

The learned inertial module is trained on more than 100 hours of data from heterogeneous robotic platforms, capturing comprehensive motion dynamics across aerial, wheeled, and legged robots. This enables the system to provide reliable motion priors when external sensors fail. Our IMU model outperforms specialized IMU models across various robot platforms.

Highlights

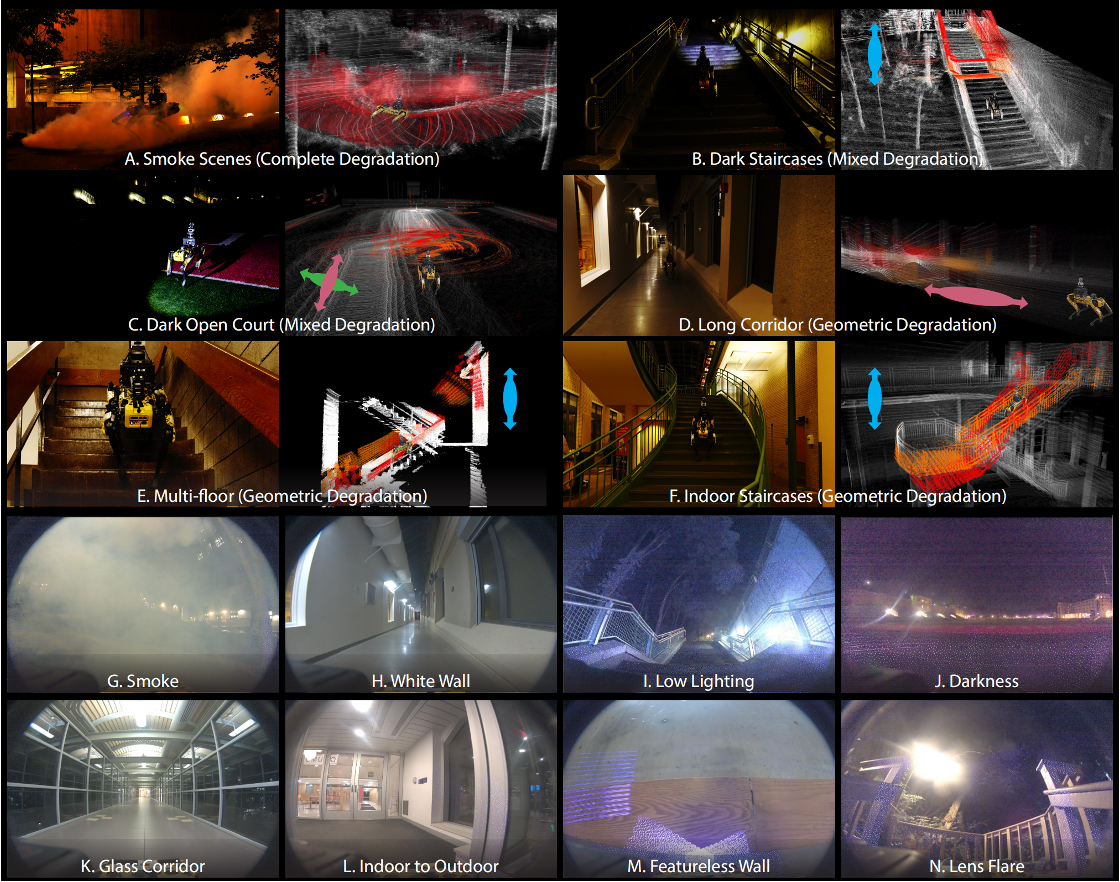

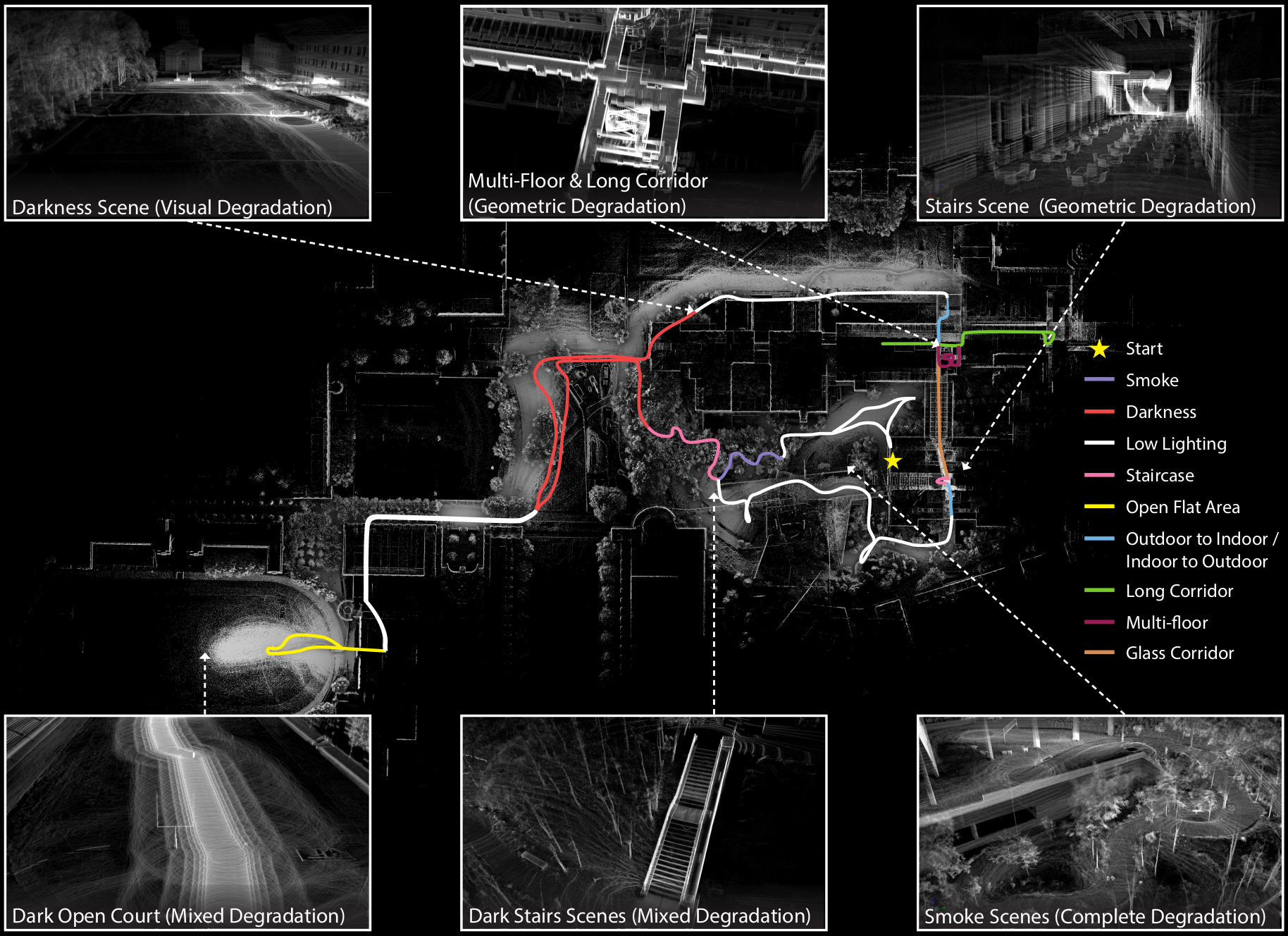

Super Odometry is evaluated under 13 consecutive types of hardware and environmental degradation in a single run, including visual degradation, geometric degradation, mixed degradation, and complete degradation. For more detail, please refer to this video.

The color-coded trajectory depicts our estimated odometry of a legged robot navigating through more than 13 complex degradation scenarios. Despite these difficulties, the final end-point drift was only 20 cm over a total distance of 2966 m.
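To put the reported numbers in proportion, the end-point drift can be expressed as a percentage of the distance travelled. The helper name `relative_drift_percent` is ours; the two inputs are the figures quoted above (20 cm and 2966 m).

```python
def relative_drift_percent(end_point_drift_m: float, distance_m: float) -> float:
    """End-point drift as a percentage of the total distance travelled."""
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    return 100.0 * end_point_drift_m / distance_m


# Figures from the text: 20 cm of end-point drift over 2966 m.
drift = relative_drift_percent(0.20, 2966.0)
print(f"{drift:.4f}%")  # prints 0.0067%
```

That is roughly 0.007% of the distance travelled. Note that end-point drift measures only the error at the end of the run, not the error along the whole trajectory.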

Experiment Results

Stress Test: Evaluation of 13 types of degradation in a single run.

Generalization: Robust odometry across diverse conditions with various sensors and robots.

Geometry Degradation: State-direction adaptation in long corridors.

Learning Inertial Network: Performance of IMU Pretrained Model.

Fallback Solution: Robust performance in a smoke scenario.

Onboard Performance: Robust odometry performance for exploration tasks using an onboard device.

Limitations & Future Work

The learning-based IMU model requires faster adaptation to new robots and environments. Despite generalizing well across platforms, it struggles with unseen domains because of distribution gaps between training and testing data. Incorporating both real-world and simulated IMU data could reduce this gap and improve generalization.

Better online learning techniques are still required to balance overfitting against catastrophic forgetting. We also need better strategies for switching between learned IMU odometry and factor graph optimization. The current solution is somewhat heuristic, and we hope to convert this hierarchical adaptation into a fully learning-based solution.

Acknowledgement

Relation to Previous Work

The current journal version of Super Odometry builds upon the previously released Super Odometry v1 system. Readers are referred to Super Odometry v1 and SuperLoc for additional background and implementation details.

Manuscript Reviewers

We thank Yuheng Qiu, Michael Kaess, Sudharshan Suresh, and Shubham Tulsiani for their valuable suggestions for the manuscript.

Real-World Experiments

We sincerely appreciate the work of Raphael Blanchard, Honghao Zhu, Rushan Jiang, Haoxiang Sun, Tianhao Wu, Yuanjun Gao, Damanpreet Singh, Lucas Nogueira, Guofei Chen, Parv Maheshwari, Matthew Sivaprakasam, Sam Triest, Micah Nye, Yifei Liu, Steve Willits, John Keller, Jay Karhade, Yao He, Mukai Yu, Andrew Jong, and John Rogers for their help in real-world experiments.

Citation

@article{zhao2025resilient,
  doi = {10.1126/scirobotics.adv1818},
  author = {Shibo Zhao and Sifan Zhou and Yuchen Zhang and Ji Zhang and Chen Wang and Wenshan Wang and Sebastian Scherer},
  title = {Resilient odometry via hierarchical adaptation},
  journal = {Science Robotics},
  volume = {10},
  number = {109},
  pages = {eadv1818},
  year = {2025},
  url = {https://www.science.org/doi/abs/10.1126/scirobotics.adv1818},
  eprint = {https://www.science.org/doi/pdf/10.1126/scirobotics.adv1818}
}

@inproceedings{zhao2021super,
  title={Super odometry: IMU-centric LiDAR-visual-inertial estimator for challenging environments},
  author={Zhao, Shibo and Zhang, Hengrui and Wang, Peng and Nogueira, Lucas and Scherer, Sebastian},
  booktitle={2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  pages={8729--8736},
  year={2021},
  organization={IEEE}
}